workshop_london_nlp_2023

Workshop London Text Analysis 2023: Introduction to transformer models

Slides

Notebooks

1.: Introduction to transformers

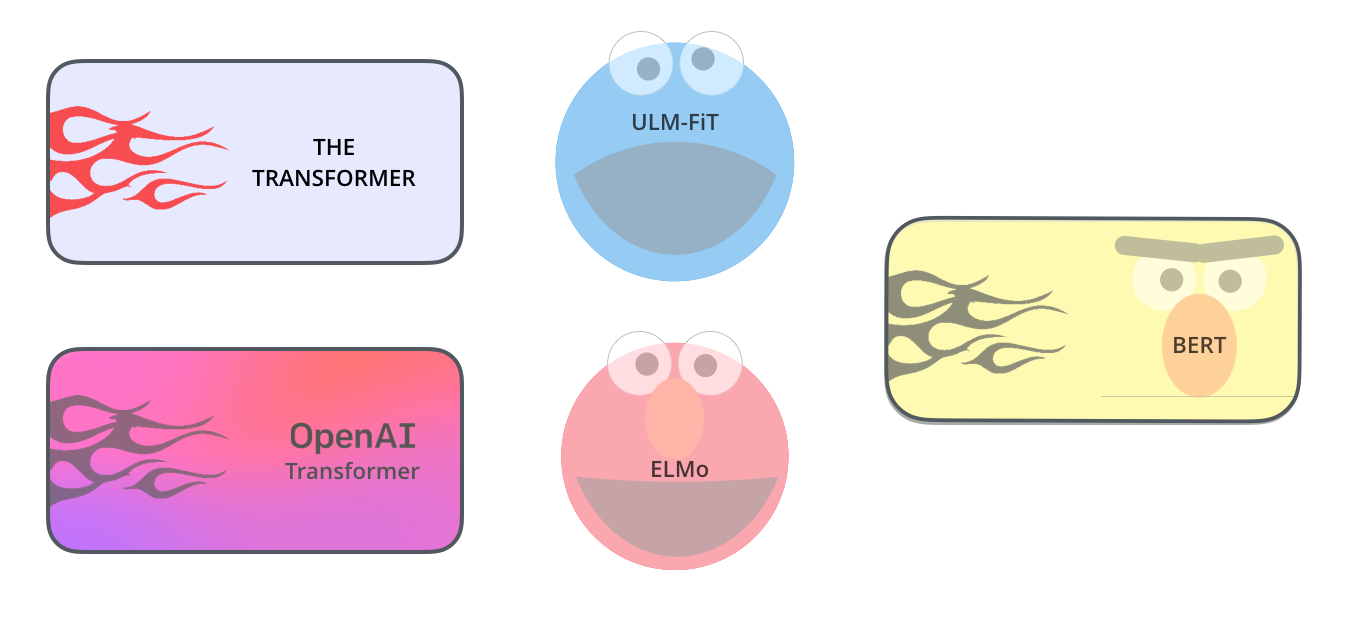

BERT, introduced by researchers at Google in 2018, is a powerful language model built on the transformer architecture. Earlier architectures such as LSTM and GRU were either unidirectional or only sequentially bidirectional; BERT instead considers the context to the left and to the right of each word simultaneously. This is made possible by the “attention mechanism,” which lets the model weigh the importance of every word in a sentence when generating representations.

In this notebook, we will practice using BERT for different tasks. Then, we will take a look under the hood and investigate the architecture a bit.
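To make the attention idea a bit more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is not how BERT is implemented: real models apply learned query, key and value projections and use many attention heads, whereas this sketch feeds the same made-up token vectors in as queries, keys and values. The function names and the toy numbers are assumptions chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of token vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this token's query with the key of every token,
        # earlier and later positions alike.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The new representation is a weighted average of all value vectors.
        context = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-dimensional vectors for a three-token sentence (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence, including those that come after it, which is the sense in which the context is used in both directions at once.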

2.: SBERT and Semantic search

Sentence Transformers are models that generate dense, high-quality embeddings of sentences, enabling accurate semantic similarity comparisons between sentences. What makes them particularly exciting for business and social science applications is that they enable more intuitive, meaning-based search, content deduplication, and clustering. With Sentence Transformers, businesses can improve the accuracy of their search engines, provide better recommendations, and reduce redundancy in content databases. Social science researchers can use them to identify commonalities between texts and to cluster documents in order to find trends and topics in large corpora.

In this notebook, we will practice the use of SBERT mainly for semantic search and semantic similarity tasks.
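As a rough illustration of what semantic search does once sentences have been embedded, the sketch below ranks a small corpus by cosine similarity to a query vector. The three-dimensional vectors are hand-written stand-ins, not real SBERT output; in the notebook the embeddings would come from a Sentence Transformers model instead. The helper names `cosine_similarity` and `semantic_search` are defined here for the example only.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def semantic_search(query_vec, corpus, top_k=2):
    """Return the top_k (score, text) pairs, most similar first."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in corpus]
    return sorted(scored, reverse=True)[:top_k]

# Made-up embeddings standing in for the output of a sentence encoder.
corpus = [
    ("Wind turbine blade design", [0.9, 0.1, 0.0]),
    ("Battery management for electric vehicles", [0.1, 0.9, 0.1]),
    ("Offshore wind farm maintenance", [0.8, 0.2, 0.1]),
]
query_vec = [1.0, 0.0, 0.0]  # stand-in embedding for a query about wind energy

results = semantic_search(query_vec, corpus)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

The same similarity scores can also feed deduplication (flag pairs above a threshold) or clustering, which is why a single embedding step supports all the use cases listed above.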

3.: LLMs and agents

In this notebook, we will play around a bit with powerful pipelines to:

- Create vector databases

- Perform semantic search over these databases

- Query LLMs

- Use LLMs with retrieval augmentation

- Use agents to query databases

To follow along, create an OpenAI API key and put $1 on it :)
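The retrieval-augmented pattern from the list above can be sketched without any external service. The example below is a toy under stated assumptions: `embed` is a hypothetical stand-in that counts words instead of calling an embedding model, `ToyVectorStore` is an in-memory list rather than a real vector database, and the final prompt is only printed, where the notebook would send it to an LLM.

```python
import math
from collections import Counter

def embed(text):
    """Hypothetical stand-in embedding: lowercase word counts."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, top_k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]

def build_prompt(question, store, top_k=1):
    """Retrieve the most similar passages and place them in front of the question."""
    context = "\n".join(store.search(question, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

store = ToyVectorStore()
store.add("BERT was introduced by researchers at Google in 2018.")
store.add("Sentence Transformers generate dense embeddings of sentences.")

prompt = build_prompt("When was BERT introduced?", store)
print(prompt)
```

The design point is the order of operations: embed and store the documents once, retrieve by similarity at question time, and only then hand the retrieved text plus the question to the language model.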

Own work and resources

Publications, Reports & Preprints using Deep NLP

- Hain, D. S., Jurowetzki, R., Squicciarini, M., & Xu, L. (2023). Unveiling the Neurotechnology Landscape: Scientific Advancements Innovations and Major Trends. UNESCO Report

- Hain, D., Jurowetzki, R., Lee, S., & Zhou, Y. (2023). Machine learning and artificial intelligence for science, technology, innovation mapping and forecasting: Review, synthesis, and applications. Scientometrics, 128(3), 1465-1472.

- Hain, D., Jurowetzki, R., & Squicciarini, M. (2022). Mapping Complex Technologies via Science-Technology Linkages; The Case of Neuroscience–A transformer based keyword extraction approach. arXiv preprint arXiv:2205.10153.

- Bekamiri, H., Hain, D. S., & Jurowetzki, R. (2022). A survey on sentence embedding models performance for patent analysis. arXiv preprint arXiv:2206.02690.

- Hain, D. S., Jurowetzki, R., Buchmann, T., & Wolf, P. (2022). A text-embedding-based approach to measuring patent-to-patent technological similarity. Technological Forecasting and Social Change, 177, 121559.

- Bekamiri, H., Hain, D. S., & Jurowetzki, R. (2021). PatentSBERTa: A deep NLP based hybrid model for patent distance and classification using augmented SBERT. arXiv preprint arXiv:2103.11933.

- Hain, D. S., Jurowetzki, R., Konda, P., & Oehler, L. (2020). From catching up to industrial leadership: towards an integrated market-technology perspective. An application of semantic patent-to-patent similarity in the wind and EV sector. Industrial and Corporate Change, 29(5), 1233-1255.

- Rakas, M., & Hain, D. S. (2019). The state of innovation system research: What happens beneath the surface?. Research Policy, 48(9), 103787. (Some NLP, though not deep.)

More from us

Further Literature and Resources

Transformer models generally

- Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. Advances in neural information processing systems, 27.

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

SBERT

- Original SBERT paper: Reimers, N., & Gurevych, I. (2019). Sentence-BERT: Sentence embeddings using Siamese BERT-networks. arXiv preprint arXiv:1908.10084.

- SBERT documentation

- NLP with SBERT - an ebook/course on the use of dense vectors (with SBERT for business applications)

- SBERT training tutorial

- BERTopic - a framework for topic modelling with SBERT embeddings